

Jenkins

- Login info
- Jobs
- Settings, Plugins
- Binary builds require conda-build and constructor

Feedstocks

- eman-dev
- eman-deps
- pydusa

General instructions

Existing feedstocks:

- Files to edit: recipe/, conda-build.yaml, conda-forge.yaml
- conda create -n smithy conda-smithy -c conda-forge
- conda-smithy rerender
- More info in conda-smithy/README.md, conda smithy -h, conda-forge.org/docs

New feedstocks:

- See conda-smithy/README.md, conda smithy -h

Docker ?

Build System Notes

CMake

- Dependency binaries are pulled from Anaconda. CMake uses the conda environment's location to find packages.

Make targets can be listed with make help. Some convenience targets are:

    $ make help
    The following are some of the valid targets for this Makefile:
    ... .....
    ... .....
    ... PythonFiles
    ... test-rt
    ... test-py-compile
    ... test-verbose-broken
    ... test-progs
    ... test-verbose
    ... .....
    ... .....
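When only some targets are of interest (for example the test-* ones), the listing can be filtered with a small script. A minimal Python sketch, assuming the "... <target>" line format that CMake-generated Makefiles print; parse_make_help and list_make_targets are hypothetical helpers, not part of EMAN2.

```python
import re
import subprocess
from typing import List

# Matches lines such as "... test-rt" or "... all (the default if no target is provided)".
TARGET_LINE = re.compile(r"^\.\.\. (\S+)")


def parse_make_help(output: str) -> List[str]:
    """Return the target names listed in the output of `make help`."""
    targets = []
    for line in output.splitlines():
        match = TARGET_LINE.match(line.strip())
        # Skip header lines and placeholder entries made only of dots.
        if match and set(match.group(1)) != {"."}:
            targets.append(match.group(1))
    return targets


def list_make_targets(build_dir: str) -> List[str]:
    """Run `make help` in a configured build directory and parse its output."""
    result = subprocess.run(["make", "help"], cwd=build_dir,
                            capture_output=True, text=True, check=True)
    return parse_make_help(result.stdout)
```

For example, [t for t in list_make_targets("build") if t.startswith("test")] would keep only the test targets of a build directory named build (a hypothetical path).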

libpython can be linked statically or dynamically when Python is built. Python extensions need to know which type of linking was used in order to avoid segfaults. This can be determined by querying Py_ENABLE_SHARED:

    python -c "import sysconfig; print(sysconfig.get_config_var('Py_ENABLE_SHARED'))"

In EMAN, this is done in cmake/FindPython.cmake.
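The same query can be wrapped in a helper that reports the linking type. A minimal Python sketch; python_link_type is a hypothetical name, and reporting a missing value as "unknown" is an assumption of this sketch (the variable is typically undefined on Windows). It only illustrates the query; the EMAN build itself does this in CMake.

```python
import sysconfig


def python_link_type() -> str:
    """Return "shared", "static" or "unknown" for this interpreter's libpython.

    Py_ENABLE_SHARED is 1 when libpython was built as a shared library and
    0 when it was linked statically. It is typically undefined (None) on
    Windows, which this sketch reports as "unknown".
    """
    value = sysconfig.get_config_var("Py_ENABLE_SHARED")
    if value is None:
        return "unknown"
    return "shared" if int(value) else "static"


# LDLIBRARY names the libpython file (for example libpythonX.Y.so or
# libpythonX.Y.a); it may be None on some platforms.
print(python_link_type(), sysconfig.get_config_var("LDLIBRARY"))
```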

When Anaconda's compilers are used, OpenGL detection is done with a CMake toolchain file.

- The glext.h header needed to compile the OpenGL-related modules is already present on Linux and Mac. On Windows, it is copied manually, once, into C:\Program Files\Microsoft SDKs\Windows\v6.0A\Include\gl. On Appveyor, it is downloaded as part of the environment setup every time a test runs.

- Compiler warnings are turned off by default and can be turned on by setting ENABLE_WARNINGS=ON:

    cmake <source-dir> -DENABLE_WARNINGS=ON

- Commands such as include_directories and add_definitions, which set compiler and linker options with global effects, are avoided. Instead, a target-focused design employing modern CMake concepts such as interface libraries is used as much as possible.

Anaconda

Dependencies not available on the anaconda or conda-forge channels are available on the cryoem channel. The binaries are built and uploaded using conda-forge's conda-smithy, which takes care of generating feedstocks, registering them on GitHub and online CI services, and building the conda recipes.

Feedstocks

Initial Setup

Maintenance

Conda-smithy Workflow

conda-smithy uses tokens to authenticate with GitHub.

Conda-smithy commands:

    conda create -n smithy conda-smithy -c conda-forge
    conda activate smithy
    conda smithy init <recipe_directory>
    conda smithy register-github <feedstock_directory> --organization cryoem
    conda smithy register-ci --organization cryoem --without-azure --without-drone
    conda smithy rerender --no-check-uptodate
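The init, registration and rerender steps can also be scripted. A minimal Python sketch, assuming the smithy environment is already active (conda activate cannot take effect from a subprocess) and that register-ci and rerender are run from inside the feedstock directory, as the commands above, which pass no directory, imply. smithy_steps and run_steps are hypothetical helpers.

```python
import shlex
import subprocess
from typing import List, Optional, Tuple

Step = Tuple[List[str], Optional[str]]  # (command, working directory)


def smithy_steps(recipe_dir: str, feedstock_dir: str, org: str = "cryoem") -> List[Step]:
    """The conda-smithy commands listed above, paired with their working directories."""
    return [
        (["conda", "smithy", "init", recipe_dir], None),
        (["conda", "smithy", "register-github", feedstock_dir, "--organization", org], None),
        (["conda", "smithy", "register-ci", "--organization", org,
          "--without-azure", "--without-drone"], feedstock_dir),
        (["conda", "smithy", "rerender", "--no-check-uptodate"], feedstock_dir),
    ]


def run_steps(steps: List[Step], dry_run: bool = True) -> None:
    """Print each command; also execute it unless dry_run is set."""
    for cmd, cwd in steps:
        print(f"[{cwd or '.'}] {shlex.join(cmd)}")
        if not dry_run:
            subprocess.run(cmd, cwd=cwd, check=True)
```

Running with dry_run=True first shows exactly what would be executed before anything is registered on GitHub or the CI services.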

TODO

- ABI compat, gcc dual ABI interface

Continuous Integration

GitHub webhooks are set up to send notifications to blake. Blake forwards them to the three build machines, although forwarding to Linux alone is sufficient: the Linux machine runs the server that drives the Jenkins jobs.

- Binary builds on local build machines.

Triggered manually by including "[ci build]" anywhere in the last commit message. Manually triggered builds on the master branch are uploaded as continuous builds; builds triggered from any other branch are uploaded to the testing area.

Also triggered daily by cron.

- Any branch whose name starts with "release-" triggers continuous builds without "[ci build]" in the commit message. Once the release branch is ready, the release binaries are manually copied from the continuous builds folder into the release folder on the server.

- CI configuration files:

JenkinsCI: Jenkinsfile

- Secrets like SSH keys are stored locally in Jenkins.

- Some environment variables need to be set on the agents:

  - HOME_DIR, DEPLOY_PATH, PATH+EXTRA (to add miniconda to PATH).

  - PATH+EXTRA was initially not set on Windows; it is now set there, too.

- Launch method: via SSH. Advanced: Prefix Start Agent Command: "D: && "

- On Windows, "Git for Windows" might need to be installed for sh calls in Jenkins to work.
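The binary-build trigger rules above amount to a small decision function. A hypothetical Python sketch of that logic, not the actual Jenkinsfile code; it assumes release branches are recognized by the "release-" name prefix and does not model the daily cron builds.

```python
from typing import Optional

CI_BUILD_TAG = "[ci build]"


def binary_build_destination(branch: str, last_commit_message: str) -> Optional[str]:
    """Return "continuous", "testing", or None when no binary build runs."""
    # release-* branches always produce continuous builds; no tag is needed.
    if branch.startswith("release-"):
        return "continuous"
    # Otherwise a build is requested only by the tag in the last commit message.
    if CI_BUILD_TAG in last_commit_message:
        return "continuous" if branch == "master" else "testing"
    return None
```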

Jenkins Setup

Jenkins run command(?)

Server on Linux, agents on Linux, Mac and Windows

Jobs:

- multi: Triggers job cryoem-eman2 on agents

- cryoem-eman2: Test(?) and binary builds

- eman-dev(?): Triggers new build of eman-dev

Jenkins Setup on Linux

Credentials

PATH

plugins(?)

Under Construction

Jenkins Setup

- Triggers

GitHub webhooks

- Cron

- Binary build trigger

- Jenkins master needs PATH prepended with $CONDA_PREFIX/bin

- Jenkins Docker image, docker-compose or docker stack deploy

- docker-compose.yml at home dir in build machines

- plugins

- config, jcasc, config.xml, users.xml, jobs/*.xml?, gpg encrypt

- Agent nodes setup, agent nodes auto-start

- Server and agent per machine vs. single server and OS agents

- Master only

- Master and agent per machine

- Single master and OS agents

Linux

- systemctl

docker run -d -u root -p 8080:8080 -p 50000:50000 --restart unless-stopped -v /home/eman2/jenkins_home:/var/jenkins_home -v /var/run/docker.sock:/var/run/docker.sock jenkins/jenkins:lts &

docker run -d -u root --name jenkins-master -p 8080:8080 -p 50000:50000 --restart unless-stopped -v /home/eman2/jenkins_home:/var/jenkins_home -v /var/run/docker.sock:/var/run/docker.sock -e PLUGINS_FORCE_UPGRADE=true -e TRY_UPGRADE_IF_NO_MARKER=true cryoem/jenkins:dev

cron:

0 0 * * * bash /home/eman2/workspace/cronjobs/cleanup_harddisk.sh

    $ cat Desktop/docker.txt
    docker run -p 8080:8080 -p 50000:50000 jenkins/jenkins:lts
    docker run -p 8080:8080 -p 50000:50000 --restart unless-stopped jenkins/jenkins:lts
    docker run -p 8080:8080 -p 50000:50000 --restart unless-stopped -v /home/eman2/jenkins_home:/var/jenkins_home jenkins/jenkins:lts
    docker run -p 8080:8080 -p 50000:50000 --restart unless-stopped -v /var/jenkins_home:/home/eman2/jenkins_home jenkins/jenkins:lts

    # Working
    docker run -u root -p 8080:8080 -p 50000:50000 --restart unless-stopped -v /home/eman2/jenkins_home:/var/jenkins_home jenkins/jenkins:lts
    docker run -u root -p 8080:8080 -p 50000:50000 --restart unless-stopped -v /home/eman2/jenkins_home:/var/jenkins_home jenkins

docker run -d -u root -p 8080:8080 -p 50000:50000 --restart unless-stopped -v /home/eman2/jenkins_home:/var/jenkins_home -v /var/run/docker.sock:/var/run/docker.sock jenkins/jenkins:lts

sudo docker run -it -v /var/jenkins_home:/home/eman2/jenkins_home jenkins

startup: right-click ???

Mac

- plist

docker run -d --name jenkins-master -p 8080:8080 -p 50000:50000 -v /Users/eman/workspace/jenkins_home:/var/jenkins_home --restart unless-stopped jenkins/jenkins:lts

Auto startup: plist (see https://imega.club/2015/06/01/autostart-slave-jenkins-mac/), placed in /Users/eman/Library/LaunchAgents

Slave clock sync (see https://blog.shameerc.com/2017/03/quick-tip-fixing-time-drift-issue-on-docker-for-mac):

    docker run --rm --privileged alpine hwclock -s

client 0 free swap space

    $ cat Desktop/docker.txt
    docker run -p 8080:8080 -v /Users/eman/workspace/jenkins_home:/var/jenkins_home jenkins
    docker run -it -p 8080:8080 -v /Users/eman/workspace/jenkins_home:/var/jenkins_home --restart unless-stopped jenkins

    # Working
    docker run -it -p 8080:8080 -v /Users/eman/workspace/jenkins_home:/var/jenkins_home --restart unless-stopped jenkins/jenkins:lts

    # Blue Ocean
    docker run \
      -u root \
      --rm \
      -d \
      -p 8080:8080 \
      -v jenkins-data:/var/jenkins_home \
      -v /var/run/docker.sock:/var/run/docker.sock \
      jenkinsci/blueocean

    # Latest
    docker run -d --name jenkins-master -p 8080:8080 -p 50000:50000 -v /Users/eman/workspace/jenkins_home:/var/jenkins_home --restart unless-stopped jenkins/jenkins:lts

Dockerfile:

    FROM jenkins/jenkins:lts
    COPY plugins.txt /usr/share/jenkins/ref/plugins.txt
    RUN /usr/local/bin/install-plugins.sh < /usr/share/jenkins/ref/plugins.txt

plugins.txt:

    ace-editor:latest
    bouncycastle-api:latest
    branch-api:latest
    chef-identity:latest
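Each plugins.txt entry has the form <plugin-id>:<version>, and "latest" leaves the version unpinned, so rebuilding the image can pull in different plugin versions over time. A minimal Python sketch for listing the unpinned plugins; parse_plugins_txt and unpinned_plugins are hypothetical helpers, and treating an entry with no version the same as "latest" is a choice made by this sketch.

```python
from typing import Dict, List


def parse_plugins_txt(text: str) -> Dict[str, str]:
    """Map plugin id -> requested version for lines of the form "<id>:<version>"."""
    plugins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        name, _, version = line.partition(":")
        plugins[name] = version
    return plugins


def unpinned_plugins(plugins: Dict[str, str]) -> List[str]:
    """Plugins requesting "latest", or no version at all, sorted by name."""
    return sorted(name for name, version in plugins.items() if version in ("", "latest"))
```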

Settings: tokens, slaves

Windows

Moving jenkins_home: see http://tech.nitoyon.com/en/blog/2014/02/25/jenkins-home-win/

Run as a service: open Task Manager (Ctrl+Shift+Esc), choose New task, browse to agent.jnlp and run it as admin (does this work?). This is for when starting via the Web Launcher doesn't work.

Currently, Task Scheduler works. Miniconda needs to be on PATH: set this during the Miniconda installation, but do not(?) register Python.

While installing Miniconda, register Python and add it to PATH. Then run conda init in cmd (conda init cmd.exe) and in Git for Windows bash (conda init bash). Maybe restart afterwards (?).

BUG: miniconda3 conda-build=3.17.8 adds vc14 even if python2 is requested in build reqs

OPENGL: https://github.com/conda/conda-recipes/blob/master/qt5/notes.md

Distribution

Binaries on cryoem.bcm.edu

EMAN2 on anaconda.org

Under Construction

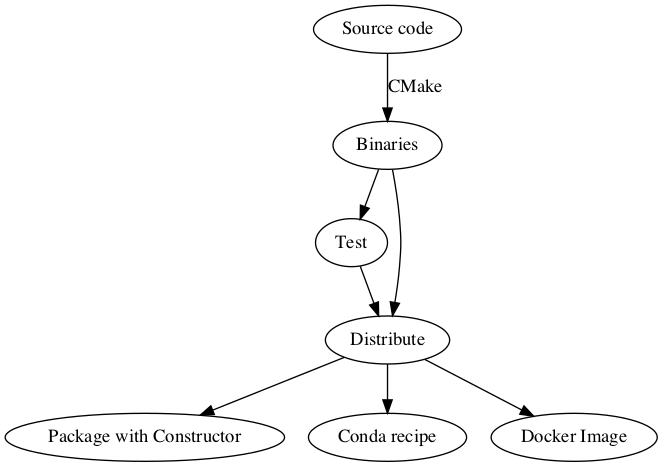

As of v2.2, EMAN2 is built with conda-build using binaries from https://anaconda.org and packaged into an installer with constructor (https://github.com/cryoem/constructor.git).

conda is the package manager.

https://anaconda.org is the online repository of binaries.

conda-build is the tool to build from source.

constructor is the tool that packages EMAN2 and its dependency binaries into a single installer file.

EMAN2 is distributed as a single installer that includes all its dependencies. However, EMAN2 is not available as a conda package on https://anaconda.org; in other words, it is not possible to install EMAN2 by typing conda install eman2.

Conda

Packages available on https://anaconda.org can be installed into any conda environment with the command conda install <package>. Conda installs the package along with its dependencies. To benefit from this automation, packages need to be built in a specific way, which is what conda-build does. conda-build builds packages according to the instructions provided in a recipe. A recipe consists of a package metadata file, meta.yaml, and any other necessary resources such as build scripts (build.sh, bld.bat), patches and so on.
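A quick sanity check of a recipe directory before running conda-build can catch a missing file early. A minimal Python sketch, assuming only the layout described above; check_recipe_dir is a hypothetical helper. Since conda-build also accepts build commands written inline in meta.yaml (build/script), which this sketch does not parse, a missing build script is reported as a warning rather than a hard error.

```python
from pathlib import Path
from typing import List


def check_recipe_dir(recipe_dir: str) -> List[str]:
    """Return a list of problems found in a conda recipe directory (empty if none)."""
    root = Path(recipe_dir)
    if not root.is_dir():
        return [f"{root} is not a directory"]
    problems = []
    if not (root / "meta.yaml").is_file():
        problems.append("missing meta.yaml")
    # build.sh is used on Unix-like systems, bld.bat on Windows.
    if not any((root / name).is_file() for name in ("build.sh", "bld.bat")):
        problems.append("no build.sh or bld.bat (fine only if meta.yaml has build/script)")
    return problems
```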

Recipes, Feedstocks and anaconda.org channel: cryoem

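Most of EMAN2's dependencies can be found on the anaconda channels defaults and conda-forge. The few that do not exist there or need to be customized have been built and uploaded to the cryoem channel (https://anaconda.org/cryoem). The recipes are hosted in separate repositories on GitHub (https://github.com/cryoem/), and every recipe repository follows the feedstock approach of conda-forge (http://conda-forge.github.io/). See https://github.com/cryoem?utf8=%E2%9C%93&q=-feedstock&type=&language= for a complete list.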

Build Strategies

Conda can be used to build and install EMAN2 in three ways. The first is to install only the binaries with conda and point CMake to the right dependency locations during configuration. The second makes use of the newly added features in EMAN2's CMake code that find the dependencies automatically. These features are activated only when the build is performed by conda-build, and CMake recognizes a conda-build build only through an environment variable, so setting that variable manually activates the features without actually running conda-build. The third is to use a recipe to run conda-build. Basic instructions for all three strategies follow.

1. Use conda for binaries only

- Install dependencies

Manually with

conda install <package>

Or with a single command

conda install eman-deps -c cryoem -c defaults -c conda-forge

Build and install EMAN2 manually into the home directory with cmake, make and make install.

Resulting installation is under $HOME/EMAN2 by default.

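This is detailed on the EMAN wiki (http://blake.bcm.edu/emanwiki/EMAN2/COMPILE_EMAN2_MAC_OS_X). Also, see build_no_envars.sh (https://github.com/cryoem/eman2/blob/master/ci_support/build_no_envars.sh).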
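The build step of this strategy can be scripted as an out-of-source build. A minimal Python sketch, assuming a separate build directory; configure_build_install is a hypothetical helper, and cmake_args is where the dependency locations (or options such as -DENABLE_WARNINGS=ON) would go. The exact CMake variables for the dependency paths are not spelled out here, so none are filled in.

```python
import shlex
import subprocess
from pathlib import Path
from typing import List, Optional, Sequence


def configure_build_install(source_dir: str, build_dir: str,
                            cmake_args: Optional[Sequence[str]] = None,
                            jobs: int = 4, dry_run: bool = True) -> List[List[str]]:
    """Run cmake, make and make install in build_dir.

    With dry_run=True (the default) the commands are only printed.
    """
    steps = [
        ["cmake", str(Path(source_dir).resolve()), *(cmake_args or [])],
        ["make", f"-j{jobs}"],
        ["make", "install"],
    ]
    if not dry_run:
        Path(build_dir).mkdir(parents=True, exist_ok=True)
    for cmd in steps:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, cwd=build_dir, check=True)
    return steps
```

Keeping the build directory separate from the source tree makes it easy to wipe and reconfigure without touching the checkout.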

2. Use conda to install EMAN2 into a conda environment

- Install dependencies

Manually with

conda install <package>

Or with a single command

conda install eman-deps -c cryoem -c defaults -c conda-forge

Set environment variables.

    export CONDA_BUILD_STATE=BUILD
    export PREFIX=<path-to-anaconda-installation-directory>  # $HOME/miniconda2/ or $HOME/anaconda2/
    export SP_DIR=$PREFIX/lib/python2.7/site-packages

Build and install EMAN2 manually into the conda environment with cmake, make and make install. See build_with_envars.sh (https://github.com/cryoem/eman2/blob/master/ci_support/build_with_envars.sh).

Resulting installation is under Anaconda/Miniconda installation, $HOME/anaconda2/ or $HOME/miniconda2/ by default.
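Instead of exporting the variables in the shell, a driver script can pass them to the build explicitly. A minimal Python sketch; conda_build_env is a hypothetical helper, the default python_version of "2.7" simply mirrors the SP_DIR path given in the export above, and only that Unix site-packages layout is handled.

```python
import os
from typing import Dict, Mapping, Optional


def conda_build_env(prefix: str, python_version: str = "2.7",
                    base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with CONDA_BUILD_STATE, PREFIX and SP_DIR set."""
    env = dict(os.environ if base is None else base)
    prefix = prefix.rstrip("/")
    env["CONDA_BUILD_STATE"] = "BUILD"
    env["PREFIX"] = prefix
    # Unix layout, as in the export above: $PREFIX/lib/pythonX.Y/site-packages
    env["SP_DIR"] = f"{prefix}/lib/python{python_version}/site-packages"
    return env
```

The returned mapping can be passed as env= to subprocess.run for each of the cmake, make and make install calls, so the variables apply to the build without leaking into the calling shell.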

3. Use recipe for a fully automated conda build

    conda build <path-to-eman-recipe-directory>
    conda install eman2 --use-local -c cryoem -c defaults -c conda-forge

Resulting installation is under Anaconda/Miniconda installation, $HOME/anaconda2/ or $HOME/miniconda2/ by default.

:TODO: Advantages/disadvantages/comparison of the strategies.

Tests

The build strategies described in Build Strategies are tested on continuous integration (CI) servers for Mac OS X (TravisCI, https://travis-ci.org/cryoem/eman2/builds) and Linux (CircleCI, https://circleci.com/gh/cryoem/eman2). On Windows (Appveyor), only the recipe strategy is tested. The tests run for every commit pushed to GitHub.

Local Build Tests and Automated Daily Snapshot Binaries with Jenkins

:TODO: Jenkins.

Binary Distribution

Constructor

Packaging is done with constructor, a tool for making installers from conda packages. The project was forked to customize the installers slightly; the customized project is at https://github.com/cryoem/constructor. The input files for constructor are maintained at https://github.com/cryoem/build-scripts (formerly https://github.com/cryoem/docker-images).

The installer bundles additional tools such as conda, conda-build and pip. It is set up so that, for convenience, the packages are kept in the package cache of the installed EMAN2 conda environment.

Build Machines

The binary packages are built on three physical machines. The operating systems are Mac OSX 10.10, CentOS 7 and Windows 10. CentOS 6 binaries are built in a CentOS 6 docker container on the CentOS 7 machine. Various build related scripts are on https://github.com/cryoem/build-scripts.

Cron

:TODO:

Windows

[Windows]

Docker

Docker images and helper scripts are at https://github.com/cryoem/build-scripts (formerly https://github.com/cryoem/docker-images).

Command to run Docker with GUI support on CentOS 7:

    xhost +local:root
    docker run -it -v /tmp/.X11-unix:/tmp/.X11-unix -e DISPLAY=unix$DISPLAY cryoem/eman-nvidia-cuda8-centos7
    # When done with eman
    xhost -local:root

:FIXME: The container runs as root on Linux. chown doesn't work, so the resulting installer is owned by root.
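Wrapping the container run so that X access is always revoked afterwards avoids leaving the X server open if docker fails or is interrupted. A minimal Python sketch; docker_gui_command and run_with_x11_access are hypothetical helpers. It uses xhost's +local:root and -local:root forms (no space), because a bare "+" argument turns off X access control for all clients. Falling back to ":0" when DISPLAY is unset is an assumption.

```python
import os
import subprocess
from typing import List, Optional

IMAGE = "cryoem/eman-nvidia-cuda8-centos7"


def docker_gui_command(image: str = IMAGE, display: Optional[str] = None) -> List[str]:
    """docker run arguments that share the host's X11 socket with the container."""
    if display is None:
        display = os.environ.get("DISPLAY", ":0")  # assumed fallback
    return [
        "docker", "run", "-it",
        "-v", "/tmp/.X11-unix:/tmp/.X11-unix",
        "-e", f"DISPLAY=unix{display}",
        image,
    ]


def run_with_x11_access(image: str = IMAGE) -> None:
    """Allow local X connections, run the container, then always revoke access."""
    subprocess.run(["xhost", "+local:root"], check=True)
    try:
        subprocess.run(docker_gui_command(image), check=True)
    finally:
        # Runs even if docker exits with an error or is interrupted.
        subprocess.run(["xhost", "-local:root"], check=False)
```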